In the study of Morphology, which is concerned with the structure of words, there has traditionally been a distinction drawn between two types of affixes, inflectional and derivational. An affix is basically what your traditional Latin or German grammars would have called an ‘ending’, though the term is more general, as it can refer to bits of words that come at the beginning (a prefix), or in the middle (an infix) or at the end (suffix) etc.

Inflection is often defined as a type of affix that distinguishes grammatical forms of the same lexeme. When we talk of lexemes in linguistics we’re usually referring to the fact that there are some word forms that differ only in their inflectional properties. So go and went are different word forms, but they belong to the same lexeme, whereas go and walk belong to different lexemes. With that in mind, let’s turn to an example of inflection. The English plural suffix -s in book-s is an inflectional suffix because it distinguishes the plural form books from the singular form book. Books and book are thus different grammatical forms of the same lexeme.

Derivation refers to an affix that indicates a change of grammatical category. Take for example the word person-al. The suffix -al does not distinguish between grammatical forms of the same lexeme: person and personal are different lexemes, and personal belongs to a different word class (i.e. it is an adjective) from person (which is obviously a noun).

That’s all well and good, but unfortunately things don’t stop there. On closer inspection it becomes clear that there are significant problems with the above definitions. First, they come with theoretical assumptions, that is, an a priori distinction between lexemes and word forms. There are theoretical implications here, as lexemes are considered to be those linguistic tokens which are stored individually in each person’s lexicon or ‘mental dictionary’, whereas anything to do with grammar is traditionally considered not to be stored there. More problematic, however, is that many affixes cannot neatly be identified as either inflection or derivation. Some seem more inflection-like than others but have derivation-like qualities too, and vice versa. This is problematic for people who believe in a dichotomous dual mechanism model, i.e. who think that grammatical information and lexical information are stored in separate components of the overall grammar.

Haspelmath (2002) discusses several more distinctions between inflection and derivation, building on the narrow definitions given above. He groups the distinctions into two categories, ‘all-or-nothing’ and ‘more-or-less’ criteria. That is, in his view, the ‘all-or-nothing’ criteria unambiguously distinguish inflection from derivation, whereas the ‘more-or-less’ do so to a lesser extent. I won’t go through every criterion as that would be tedious, but you’ll soon get a sense that there are problems with pretty much all of them.

His first ‘all-or-nothing’ criterion is basically the one we used to define our terms at the beginning: derivation indicates a change of category, whereas inflection does not. However, consider the German past participle gesungen, (‘sung’). On first glance this seems to be an example of bog-standard inflection, The circumfix ge- -en indicates that gesungen is a different grammatical form of the lexeme singen (‘to sing’) from, say, singst (‘you (sg) sing’). They are all the same category, however, as they are all verbs. However, gesungen can change category when it functions as an attributive adjective, as in (1):

1. Ein gesungen-es Lied

A sing.PP-NOM song

‘A song that is sung’

In this case, then, an example of what appears to be inflection can also change category.

Haspelmath’s (2002) third criterion is that of obligatoriness. The saying goes that inflection is ‘obligatory’, but derivation is not. For example, in (2), the right kind of inflection must be present for the sentence to be grammatical:

(2) They have *sing/*sings/*sang/sung.

By contrast, derivation is never obligatory in this sense, and is determined by syntactic context. However, some examples of inflection are not obligatory in the sense described above either. For example, the concept of number is ultimately the speaker’s choice: she can decide whether she wishes to utter the form book or books based on the discourse context. Because of this, Booij (1996) distinguishes between two types of inflection, inherent and contextual. Inherent inflection is the kind of inflection which is determined by the information a speaker wishes to convey, like the concept of number. Contextual inflection is determined by the syntactic context, as in (2). Keep this distinction in mind, we’ll come back to it!

In addition, there are problems with all of Haspelmath’s (2002) further ‘more-or-less’ criteria. I’ll take three of them here, but I’ll cover them quickly.

i. Inflection is found further from the base than derivation

Example: in personalities we have the base person, then the derivational suffixes -al and -ity before we get the inflectional suffix -s. You don’t get, e.g. *person-s-al-ity

Problem: Affect-ed-ness has the opposite ordering (i.e. inflectional suffix -ed is closer to the base than the derivational suffix -ness).

ii. Inflectional forms share the same concept as the base, derivational forms do not.

Example: person-s has same concept as person, but person-al does not.

Problem: It’s very vague! What is a ‘concept’? What about examples like German Kerl-chen (‘little tyke’)? -chen is usually considered to be an inflectional suffix, but Kerl doesn’t mean ‘tyke’, it means ‘bloke’. There is surely a change in concept here?

iii. Inflection is semantically more regular (i.e. less idiomatic) than derivation.

Example: inflectional suffixes like -s and -ed indicate obvious semantic content like ‘plural’ and ‘past tense’, but it’s not always clear what derivational suffixes like -al actually represent semantically. Derivation, such as in the Russian dnev-nik (‘diary’, lit. ‘day-book’) is more idiomatic in meaning (i.e. you can’t work out its meaning from the sum of its parts).

Problem: What about inflectional forms like sand-s, which is idiomatic in meaning? (i.e. sands does not equate with the plural of sand in the same way that books does with book.)

So, why does this matter? I alluded to the problem above. Basically, many linguists (e.g. Perlmutter (1988)) are keen to hold to a dichotomous approach to grammatical and lexical components in terms of how linguistic information is stored in the brain. They want inflection and derivation to be distinct in a speaker’s linguistic competence in accordance with the dual mechanism model, with derivation occurring in the lexicon and inflection occurring subsequent to syntactic operations. But the natural language data seem to indicate that the distinction between inflection and derivation is somewhat fuzzier.

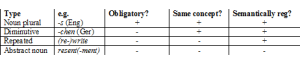

So how do people get around it? There are several ways, but I’ll outline two of them here. The first is known as the Continuum approach, advanced by scholars such as Bybee (1985). As the name suggests, this approach entails that there is a continuum between inflection and derivation. Take a look at the following table, adapted from Haspelmath (2002:79) (sorry it’s so small):

In the descending rows, the different types of inflectional/derivational affixes can be placed in an order according to how prototypically inflectional or derivational they are. For example, the -s plural suffix is prototypically more inflectional than the German diminutive suffix -chen.

But this approach can’t account for the order preference of base-derivation-inflection, which is one of the properties we discussed above. In addition, it carries with it great theoretical implications, namely that the grammar and the lexicon form a continuum. This is not the place to get into this debate, but I think there are good reasons for keeping the two distinct.

Booij (1996; 2007) comes up with a tri-partite approach to get around this problem, and it goes back to the distinction made above between inherent and contextual inflection. His approach is neat, because it attempts to account for the fuzziness of the inflection/derivation boundary while maintaining a distinction between the grammar and the lexicon. By dividing inflection/derivation phenomena into three rather than two (so derivation plus the two different types of inflection), we can account for some of the problematic phenomena we discussed above. For example, ‘inherent’ inflection can account for lack of obligatoriness in inflection when this occurs, as well as accounting for the occasional base-inflection-derivation order, when that occurs. ‘Contextual’ inflection takes care of obligatory inflection and the usual ordering of base-derivation-inflection.

There’s more to be said on this: can Booij’s tripartite approach really explain why, for example, the ordering base-derivation-inflection is so much more common than the other ordering? What about the problems with inflection that can change category such as in ein gesungenes Lied? Nevertheless, we’ve seen that a sharp distinction between inflection and derivation cannot be drawn, which has consequences for a dichotomy approach to the grammar. This dichotomy can be maintained if we follow Booij’s distinction of contextual versus inherent inflection.

References

Booij, G. 1996. Inherent versus contextual inflection and the split morphology hypothesis,

Yearbook of Morphology 1995, 1-16.

Booij, G. 2007. The Grammar of Words. An Introduction to Morphology. Oxford: OUP.

Bybee, J. 1985. Morphology. The Relation between Form and Meaning. Benjamins:

Amsterdam.

Haspelmath, M. 2002. Understanding Morphology. London: Arnold.

Perlmutter, D. M. 1988. The split morphology hypothesis: evidence from Yiddish, in M.

Hammond & M. Noonen (eds), Theoretical Morphology. San Diego, CA: Academic Press,

79-100.